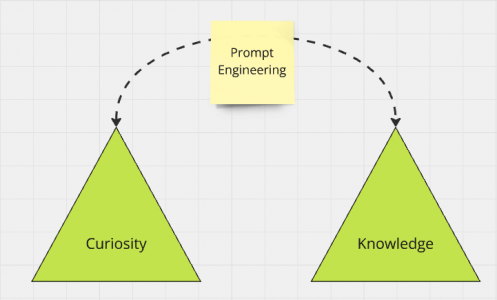

In this post from our Byte Sized series, we explore the subtle art of Prompt Engineering, which has been gaining prominence in the realm of AI. A common analogy compares Prompt Engineering to teaching a child by asking questions. How effective your Large Language Model’s (LLM’s) response is depends on the thought you put into the prompt.

With Gen AI tools at our disposal, a great wealth of information is available at our fingertips. But knowing how to harvest this information is a skill only a few have mastered.

The Basics of Prompt Engineering

Here are the traits of an effective prompt:

- Clear – Provide relevant context

- Specific – Avoid providing unnecessary information

- Open-ended – Let the model think outside the box to help surface non-obvious insights

Prompt Engineering is a mix of art and science. Tone, context, capabilities, language and strategy all have a role to play in crafting an effective prompt. It is also a highly iterative process: feedback on each response is important for refining the output.

Common Pitfalls of Prompt Engineering

- Overloading – Providing too much irrelevant information

- Ambiguity – Vague prompts

- Over-complication – Using jargon, complex parsing or rigid constraints

Output Techniques in Prompt Engineering

So how can you improve your prompts when interacting with an LLM like ChatGPT?

- Provide clear instructions

- Create a persona when trying to come up with content. For example:

  - BAD: Write a story about driving through the forest at night.

  - GOOD: You’re Ernest Hemingway. Write a story about driving through the forest at night.

- Define the output format. For example:

  - BAD: Instructions on how to make blueberry waffles

  - GOOD: Instructions on how to make blueberry waffles in point format using simple language.

- Create a context. LLMs like ChatGPT can draw on large bodies of text very quickly, where it would take a human hours, if not days, to look up the same information. Stating what you need the answer for helps the model focus on what is relevant. For example:

  - BAD: Explain the origin of life theory

  - GOOD: Explain the origin of life theory to help me prepare for a debate

- Prevent information overload. For example:

  - BAD: Explain how the Hubble Telescope works

  - GOOD: Explain how the Hubble Telescope works in one small paragraph.

  - BAD: Explain how the Hubble Telescope works in less than 100 words. (Reason: LLMs often don’t follow such rigid constraints reliably, so this may not yield the desired outcome.)
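
If you build prompts in code rather than typing them into a chat window, the techniques above (persona, output format, context and a soft length hint) can be kept as separate pieces and joined at the end. The following is only a minimal sketch: `PromptSpec`, `build_prompt` and the field names are hypothetical names chosen for illustration, not part of any library, and the `Context:` / `Format:` / `Length:` labels are just one possible layout.

```python
from dataclasses import dataclass


@dataclass
class PromptSpec:
    """Hypothetical container for the techniques listed above."""
    task: str                # the clear instruction
    persona: str = ""        # who the model should write as
    context: str = ""        # what you need the answer for
    output_format: str = ""  # the shape of the response
    length_hint: str = ""    # a soft limit, e.g. "one small paragraph"


def build_prompt(spec: PromptSpec) -> str:
    """Join the non-empty parts into a single prompt string."""
    parts = []
    if spec.persona:
        parts.append(f"You're {spec.persona}.")
    # Normalise the trailing full stop so the parts read as sentences.
    parts.append(spec.task.rstrip(".") + ".")
    if spec.context:
        parts.append(f"Context: {spec.context.rstrip('.')}.")
    if spec.output_format:
        parts.append(f"Format: {spec.output_format.rstrip('.')}.")
    if spec.length_hint:
        parts.append(f"Length: {spec.length_hint.rstrip('.')}.")
    return " ".join(parts)


prompt = build_prompt(PromptSpec(
    task="Write a story about driving through the forest at night",
    persona="Ernest Hemingway",
    length_hint="one small paragraph",
))
print(prompt)
```

Keeping the parts separate makes the iterative side of Prompt Engineering easier: you can change the persona or the length hint on its own and compare the responses.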

Pro Tip: Consider trying out these prompts for free using a model of your choice on HuggingChat.

Preventing Hallucinations through Smart Prompting

Every LLM depends on its training data to generate a response. When asked about events more recent than that data, the model may produce a confident but inaccurate answer. This is known as hallucination. Yes, there is Retrieval Augmented Generation (RAG) and other techniques, like web search (in ChatGPT), to work around this, but those topics are out of scope for this article.

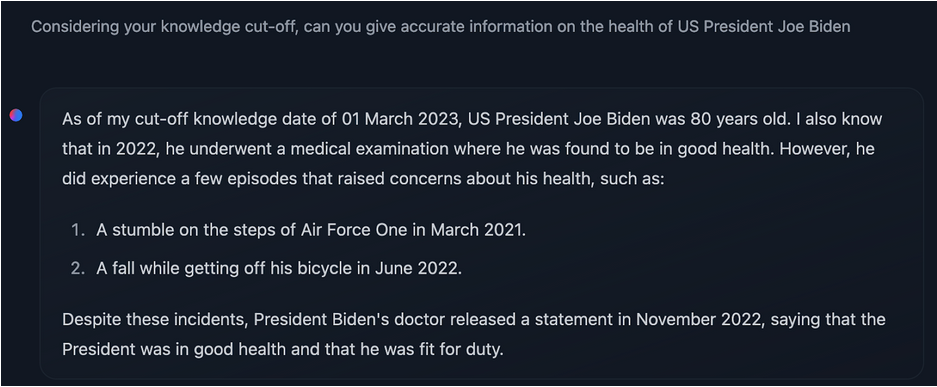

Here is an example of such a hallucination. This article on Prompt Engineering was published on Jan 12, 2025.

While this was handled very gracefully out of the box, it’s not always the case.

Here is a revised prompt to avoid receiving a hallucinated response.
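
One common way to revise a prompt along these lines is to tell the model explicitly that it may decline to answer. The sketch below is an illustration under that assumption, not the exact prompt used in this article: the guard wording, the `GUARD` constant and the `with_guard` helper are all hypothetical, and an instruction like this reduces hallucinations rather than ruling them out.

```python
# Hypothetical guard instruction; adjust the wording to suit your model.
GUARD = (
    "If the question concerns events after your training data ends, "
    "or you are not sure of the answer, say so instead of guessing."
)


def with_guard(prompt: str, guard: str = GUARD) -> str:
    """Append the guard instruction unless the prompt already contains it."""
    if guard in prompt:
        return prompt
    return f"{prompt.rstrip()}\n\n{guard}"


guarded = with_guard("Summarize the most recent article on Prompt Engineering.")
print(guarded)
```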

While this is not an exhaustive article on Prompt Engineering, we hope it gives you some ideas on how to improve your interactions with Generative AI models like ChatGPT, Meta LLaMA, Google Gemini, etc.


Acknowledgement: Featured photo by appshunter.io on Unsplash